ACI-REF

Advanced CyberInfrastructure - Research and Education Facilitators

The ACI-REF consortium includes six institutions that embrace the condominium computing model. We are dedicated to forging a nationwide alliance of educators to empower local campus researchers to be more effective users of advanced cyberinfrastructure (ACI). In particular, we seek to work with the “academic missing middle” of ACI users—those scholars and faculty members who traditionally have not benefited from the power of massively scaled cluster computing but who recognize that their research requires access to more compute power than can be provided by their desktop machines.

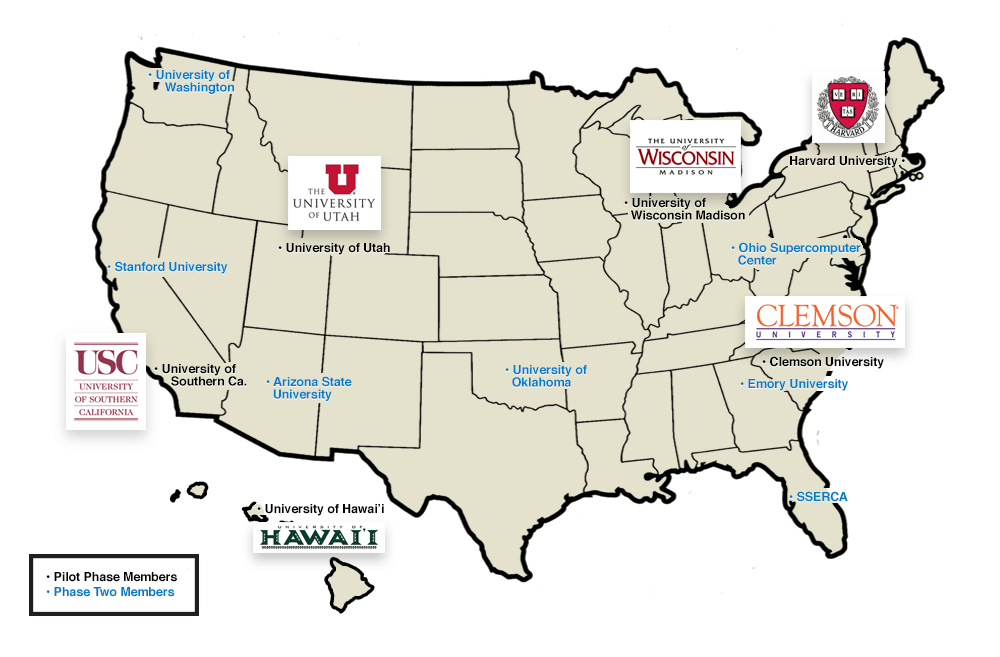

Members of the ACI-REF Program

The ACI-REF program funds two facilitators at each of these six institutions.

The program has identified the following five goals:

- Enable scientific and research outcomes that would not be possible without the project and ACI-REF community.
- Create a community that fosters mentorship and sharing of expertise at individual (ACI-REF to researcher) and campus (institution to institution) levels to help others advance their capabilities and outcomes.
- Develop a framework for sharing best practices and lessons learned for facilitation of interactions with researchers that can be deployed at other campuses.
- Increase the breadth and depth of interactions with researchers on campuses in various disciplines.
- Enable researchers to be more competitive for external research funding and to foster new collaborations among researchers, campuses, and other organizations.

To work toward these goals, the facilitators hold regular calls and face-to-face meetings. We also participate in computing workshops and conferences, including XSEDE14 (July 2014; poster presented), the Internet2 2014 Technology Exchange (October 2014; panel), and Supercomputing 14 (November 2014; panel), as well as domain-specific conferences when appropriate.

More details on the program are available at the ACI-REF website.

ACI-REF Facilitators at the University of Utah

At the University of Utah, we have chosen to partially fund four CHPC staff to be facilitators in this program. The four staff, along with a short description of their background, expertise and roles in the program, are given below:

| Name | ACI-REF Disciplines | Education | Biographical Narrative |
|---|---|---|---|
| Wim Cardoen | Python, Hadoop, MPI, Java, C++ | PhD in Physical Chemistry | Wim R. Cardoen has been working at the Center for High Performance Computing for over five years as a Staff Scientist. He is a computational chemist by training. In that role he has developed (parallel) electronic structure code as well as molecular dynamics code (classical and quantum). Lately, he has been working on a big data project in the field of bioinformatics that is based on a Hadoop framework. He is also involved in the parallel implementation of a code that studies transit planning. Alongside these projects, Wim performs software installations and provides user training and support. |
| Martin Cuma | MPI/OpenMP programming, OpenACC GPU programming, MATLAB, Geophysics | PhD in Physical Chemistry | Martin Cuma is a scientific application consultant at the Center for High Performance Computing (CHPC) at the University of Utah, advising University researchers on the deployment of their applications on the CHPC computers. A computational chemist by training, Martin has advised numerous researchers over his 15 years at CHPC and over the last 8 years has focused on collaboration with the Consortium for Electromagnetic Modeling and Inversion (CEMI) at the Department of Geology and Geophysics. In his research with CEMI, he focuses on the development of highly parallel geophysical modeling and inversion methods. Martin also manages the CHPC satellite location for the XSEDE virtual education classes and teaches short courses on parallel programming to CHPC users. |
| Anita Orendt | Computational Chemistry Applications | PhD in Physical Chemistry | Anita Orendt has been a Staff Scientist for Molecular Sciences at Utah's Center for High Performance Computing (CHPC) since 1999, and in Fall 2013 she became CHPC’s Assistant Director for Research Consulting & Faculty Engagement. In this role she oversees the faculty collaboration efforts of all CHPC staff members and acts as the point person for communication with the CHPC user community. With a degree in Physical Chemistry, she consults and collaborates with various research groups on the use of molecular science applications, performs software installations, and provides general user support. Anita is also an XSEDE Campus Champion for the University of Utah. |

Collaborative Projects

Optimization of File Transfer from NCBI to Utah and between ACI-REF schools

As part of the ACI-REF program, CHPC systems and networking staff have been working with their counterparts at Clemson, including Clemson researcher Alex Feltus, to configure and optimize data transfer from NIH’s National Center for Biotechnology Information (NCBI) to Utah, as well as between Utah and Clemson. The other ACI-REF schools are running similar experiments. So that the results can be compared, all schools are working with the same 12 TB data set and the same workflow (cURL and Aspera transfer clients).
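One way a cURL-based workflow like this could be split into several concurrent flows is sketched below. This is an illustrative sketch only, not the workflow used by CHPC or Clemson: the function names `build_curl_command` and `fetch_all`, the `max_flows` default, and any URLs passed in are hypothetical, and the Aspera client is not covered. It assumes the `curl` command-line tool is installed.

```python
import subprocess
from concurrent.futures import ThreadPoolExecutor
from pathlib import Path
from urllib.parse import urlparse


def build_curl_command(url, dest_dir):
    """Return the curl argument list that downloads one URL into dest_dir."""
    filename = Path(urlparse(url).path).name
    target = Path(dest_dir) / filename
    return [
        "curl",
        "--fail",               # report HTTP errors as a non-zero exit code
        "--silent",
        "--show-error",
        "--location",           # follow redirects
        "--continue-at", "-",   # resume a partially downloaded file
        "--output", str(target),
        url,
    ]


def fetch_all(urls, dest_dir, max_flows=8, runner=subprocess.run):
    """Download urls using at most max_flows concurrent curl processes.

    Returns a dict mapping each URL to the exit code of its curl process.
    The runner argument exists so the logic can be exercised without a network.
    """
    commands = [build_curl_command(url, dest_dir) for url in urls]
    with ThreadPoolExecutor(max_workers=max_flows) as pool:
        # Each worker thread blocks on one curl process at a time.
        results = list(pool.map(runner, commands))
    return {url: result.returncode for url, result in zip(urls, results)}
```

Calling `fetch_all(list_of_urls, "/path/to/scratch", max_flows=16)` would start up to 16 transfers at once; a suitable number of flows depends on the network path and the file systems at both ends, and would need to be found by experiment.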

Preliminary results for a transfer from NCBI to CHPC file systems have been very promising. By optimizing and parallelizing the flow, we were able to download this test data set in 2.5 hours (the transfer starts at 19:00 on the graph below, and rates decrease as individual flows finish), achieving average transfer rates between 10 Gb/s and 14 Gb/s.
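As a quick consistency check on these figures, the average rate implied by moving 12 TB in 2.5 hours can be worked out directly. The sketch below assumes decimal terabytes (10^12 bytes) by default and that "Gb/s" means gigabits per second; the function name and its parameters are illustrative.

```python
def average_rate_gbps(size_terabytes, hours, bytes_per_terabyte=1e12):
    """Average transfer rate in gigabits per second for a given size and duration."""
    total_bits = size_terabytes * bytes_per_terabyte * 8
    seconds = hours * 3600
    return total_bits / seconds / 1e9


# 12 TB in 2.5 hours, decimal terabytes
print(f"{average_rate_gbps(12, 2.5):.2f} Gb/s")  # prints "10.67 Gb/s"
```

Under the binary interpretation (2^40 bytes per terabyte) the same calculation gives roughly 11.7 Gb/s. Either value falls within the 10 to 14 Gb/s range reported above.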

These initial tests were all performed over our standard Internet2 paths. We plan to run the same tests over a software-defined network (SDN), which should increase transfer rates further.